Turbopack

Turbopack is an incremental bundler optimized for JavaScript and TypeScript, written in Rust.

This document covers developing and debugging Turbopack. For information on using Turbopack for Next.js applications, see the Next.js documentation.

What to read

If this is your first time setting up a development environment, we recommend that you read Setting up a development environment and How to run tests first.

The implementation of Next.js features in Turbopack is covered in the Next.js integration section, and How Turbopack works gives a high-level overview of Turbopack’s internals.

Lastly, API references contains the rustdoc-generated API documentation for all Turbopack-related packages.

Setting up a development environment

What to install

You should have the following tools installed on your system.

- Rust

- Node.js - We recommend a version manager like fnm

- pnpm - We recommend using Corepack

- Your favorite development editor - We recommend one that supports rust-analyzer

- Other toolchains such as xcode or gcc may be required depending on the system environment and can be discussed in #team-turbopack.

Nextpack

Turbopack currently uses code from two repositories, Next.js and Turbo, so it can be cumbersome to incorporate changes from either repository. Nextpack lets you work on the two repositories as a single, linked workspace. We recommend using Nextpack unless you have a specific reason not to.

You can read about how you can set up a development environment in the Nextpack repository.

Upgrading Turbopack in Next.js

For Next.js to reflect the changes you made on the Turbo side, you need to release a new version. Turbopack does not currently publish packages to crates.io; new versions are released based on git tags instead. By default, a nightly version is released every day, but if you need a new change sooner you can create a tag manually. The format of the tag is turbopack-YYMMDD.n. When creating a PR for Next.js, you should use Nextpack’s next-link script to properly include the runtime code used by Turbopack.
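The tag format can be illustrated with a pair of small helpers. This is only a sketch: the function names `nightly_tag` and `parse_tag` are hypothetical, and treating `n` as a per-day counter is an assumption, not something the release tooling is known to guarantee.

```rust
/// Formats a tag in the `turbopack-YYMMDD.n` shape.
/// `year` is the full year (e.g. 2024); only its last two digits are used.
/// `n` is assumed to be a counter for tags created on the same day.
fn nightly_tag(year: u32, month: u32, day: u32, n: u32) -> String {
    format!("turbopack-{:02}{:02}{:02}.{}", year % 100, month, day, n)
}

/// Splits a tag back into `(yy, mm, dd, n)`.
/// Returns `None` when the tag does not have the expected shape.
/// Month and day ranges are not validated in this sketch.
fn parse_tag(tag: &str) -> Option<(u32, u32, u32, u32)> {
    let rest = tag.strip_prefix("turbopack-")?;
    let (date, n) = rest.split_once('.')?;
    if date.len() != 6 || !date.bytes().all(|b| b.is_ascii_digit()) {
        return None;
    }
    let yy = date[0..2].parse().ok()?;
    let mm = date[2..4].parse().ok()?;
    let dd = date[4..6].parse().ok()?;
    Some((yy, mm, dd, n.parse().ok()?))
}
```

For example, `nightly_tag(2024, 2, 5, 1)` produces `turbopack-240205.1`, which `parse_tag` splits back into its parts.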

How to run tests

Next.js

Most Turbopack testing is done through integration tests in Next.js. Currently (as of February 2024), Turbopack does not pass all of the Next.js test cases. When running tests, some tests are not performed because of the distinction between Turbopack and traditional tests.

The Turbopack test manifest contains a list of tests that either pass or fail, and the test runner selectively runs the passing tests on CI.

In general, you can use CI to run full test cases and check for regressions, and run individual tests on your local machine in the following ways.

# Run a single test file

TURBOPACK=1 pnpm test-dev test/e2e/edge-can-use-wasm-files/index.test.ts

# Run a specific test case in the specified file

TURBOPACK=1 pnpm test-dev test/e2e/edge-can-use-wasm-files/index.test.ts -t "middleware can use wasm files lists the necessary wasm bindings in the manifest"

A few environment variables control the tests’ behavior.

# Run Next.js in Turbopack mode. Required when running Turbopack tests.

TURBOPACK=1

# Specifies the location of the next-swc napi binding binary.

# Allows using locally changed Turbopack binaries in a certain app (e.g. vercel/front) without replacing

# the whole Next.js installation.

__INTERNAL_CUSTOM_TURBOPACK_BINDINGS=${absolute_path_to_node_bindings}

Updating test snapshot

Most test cases that have snapshots branch like this:

if (process.env.TURBOPACK) {

expect(some).toMatchInlineSnapshot(..)

} else {

expect(some).toMatchInlineSnapshot(..)

}

This allows you to test different output snapshots per bundler without changing snapshots. When those snapshots change, the test should be run against both webpack and Turbopack to ensure that both snapshots reflect the changes.

Check the status of tests

There are a few places where we can track the status of tests.

Turbopack

Turbo also has some tests for Turbopack. We recommend using nextest to run them.

Test flakiness

Both the Next.js and the Turbopack tests can be flaky. In most cases, retrying CI a couple of times resolves the issue. If it does not and you are unsure, check in #team-turbopack or #coord-next-turbopack.

Tracing Turbopack

Turbopack comes with a tracing feature that keeps track of executions along with their runtime and memory consumption. This is useful for debugging and optimizing the performance of your application.

Logging

Inside of Next.js one can enable tracing with the NEXT_TURBOPACK_TRACING environment variable.

It supports the following special preset values:

- 1 or overview: Basic user-level tracing is enabled. (This is the only preset available in a published Next.js release.)

- next: Same as overview, but with lower-level debug and trace logs for Next.js’s own crates.

- turbopack: Same as next, but with lower-level debug and trace logs for Turbopack’s own crates.

- turbo-tasks: Same as turbopack, but also with verbose tracing of every Turbo-Engine function execution.

Alternatively, any syntax supported by tracing_subscriber::filter::EnvFilter can be used.

The more detailed tracing levels require a custom Next.js build. See Setup for more information on how to create one.

With this environment variable, Next.js will write a .next/trace.log file with the tracing information in a binary format.

Viewer

To visualize the content of .next/trace.log, the turbo-trace-viewer can be used.

This tool connects to the trace-server through a WebSocket on port 57475 on localhost.

One can start the trace-server with the following command:

cargo run --bin turbo-trace-server --release -- /path/to/your/trace.log

The trace viewer lets you switch between several visualization modes:

- Aggregated spans: Spans with the same name in the same parent are grouped together.

- Individual spans: Every span is shown individually.

- … in order: Spans are shown in the order they occur.

- … by value: Spans are sorted and spans with the largest value are shown first.

- Bottom-up view: Instead of showing the total value, the self value is shown.

There are also different value modes:

- Duration: The CPU time of each span is shown.

- Allocated Memory: How much memory was allocated during the span.

- Allocations: How many allocations were made during the span.

- Deallocated Memory: How much memory was deallocated during the span.

- Persistently allocated Memory: How much memory was allocated but not deallocated during the span. It survives the span.
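How two of these values relate to the raw numbers can be sketched with a toy span type. The struct and its field names are hypothetical and are not the trace viewer's actual data model; the arithmetic simply follows the descriptions above (self value as total minus children, persistent memory as allocated minus deallocated).

```rust
/// A simplified span. Field names are illustrative only.
struct Span {
    total_duration_us: u64,
    allocated_bytes: u64,
    deallocated_bytes: u64,
    children: Vec<Span>,
}

impl Span {
    /// Self value, as used by the bottom-up view: the span's total
    /// minus whatever is attributed to its direct children.
    fn self_duration_us(&self) -> u64 {
        let children: u64 = self.children.iter().map(|c| c.total_duration_us).sum();
        // Saturate so that overlapping (parallel) children cannot underflow.
        self.total_duration_us.saturating_sub(children)
    }

    /// Memory that was allocated during the span and not freed before it ended.
    fn persistent_bytes(&self) -> u64 {
        self.allocated_bytes.saturating_sub(self.deallocated_bytes)
    }
}
```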

Turbo Tasks: Concepts

Introduction

The Turbo Tasks library is an incremental computation system that uses macros and types to automate the caching process. Externally, it is known as the Turbo engine.

It draws inspiration from webpack, Salsa (which powers Rust-Analyzer and Ruff), Parcel, the Rust compiler’s query system, Adapton, and many others.

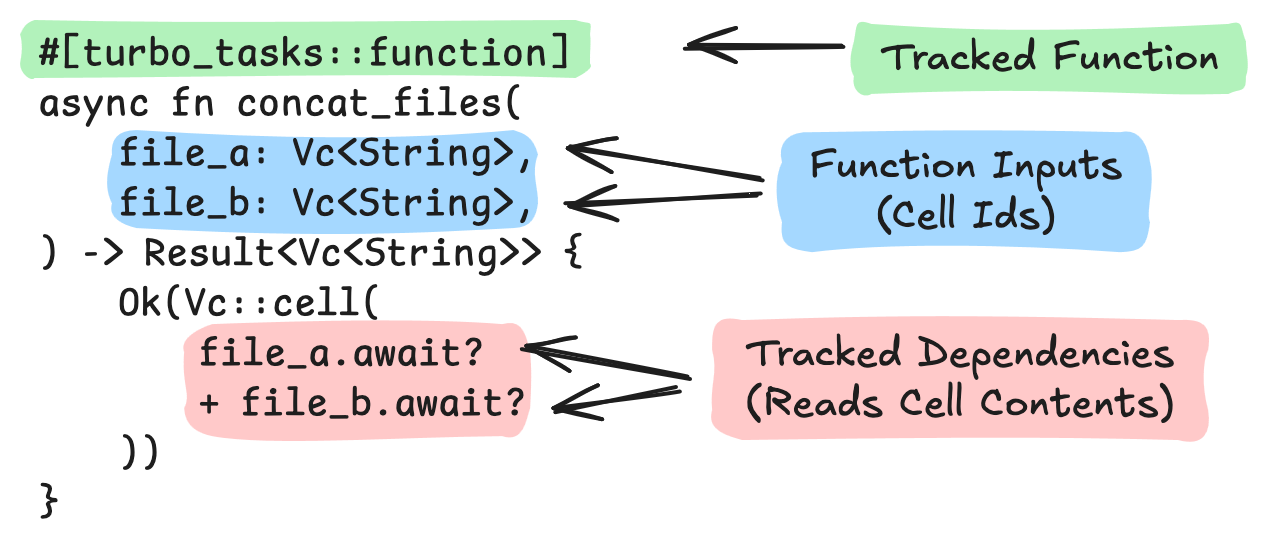

Functions and Tasks

#[turbo_tasks::function]s are memoized functions within the build process. An instance of a function with arguments is called a task. They are responsible for:

- Tracking Dependencies: Each task keeps track of its dependencies to determine when a recomputation is necessary. Dependencies are tracked when a Vc<T> (Value Cell) is awaited.

- Recomputing Changes: When a dependency changes, the affected tasks are automatically recomputed.

- Parallel Execution: Every task is spawned as a Tokio task, which uses Tokio’s multithreaded work-stealing executor.

Task Graph

All tasks and their dependencies form a task graph.

This graph is crucial for invalidation propagation. When a task is invalidated, the changes propagate through the graph, triggering rebuilds where necessary.

Incremental Builds

The Turbo engine employs a bottom-up approach for incremental builds.

By rebuilding invalidated tasks, only the parts of the graph affected by changes are rebuilt, leaving untouched parts intact. No work is done for unchanged parts.
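The bottom-up propagation can be sketched with a toy graph built on the standard library. This is not the engine's data structure: `TaskGraph` and its methods are hypothetical, and the sketch conservatively marks every transitive dependent as dirty, whereas the real engine can stop propagating once a recomputed cell compares equal to its previous value.

```rust
use std::collections::{BTreeMap, BTreeSet};

/// Toy task graph: maps each task id to the tasks that read its output.
#[derive(Default)]
struct TaskGraph {
    dependents: BTreeMap<u32, BTreeSet<u32>>,
}

impl TaskGraph {
    /// Records that `reader` depends on the output of `dependency`.
    fn add_dependency(&mut self, reader: u32, dependency: u32) {
        self.dependents.entry(dependency).or_default().insert(reader);
    }

    /// Returns every task that must be re-executed when `changed` is
    /// invalidated, including `changed` itself. Tasks that are not
    /// reachable from `changed` are never visited.
    fn invalidate(&self, changed: u32) -> BTreeSet<u32> {
        let mut dirty = BTreeSet::new();
        let mut queue = vec![changed];
        while let Some(task) = queue.pop() {
            // `insert` returns false for tasks we have already seen.
            if dirty.insert(task) {
                if let Some(readers) = self.dependents.get(&task) {
                    queue.extend(readers.iter().copied());
                }
            }
        }
        dirty
    }
}
```

With the chain 1 → 2 → 3 and an unrelated pair 4 → 5, invalidating task 1 marks only tasks 1, 2 and 3; tasks 4 and 5 are left untouched.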

Turbo Tasks: Tasks and Functions

A Task is an instance of a function along with its arguments. These tasks are the fundamental units of work within the build system.

Defining Tasks

Tasks are defined using Rust functions annotated with the #[turbo_tasks::function] macro.

#[turbo_tasks::function]

fn add(a: i32, b: i32) -> Vc<Something> {

// Task implementation goes here...

}

- Tasks can be implemented as either a synchronous or asynchronous function.

- Arguments must implement the TaskInput trait. Usually these are primitives or types wrapped in Vc<T>. For details, see the task inputs documentation.

- The external signature of a task always returns Vc<T>. This can be thought of as a lazily-executed promise to a value of type T.

- Generics (type or lifetime parameters) are not supported in task functions.

External Signature Rewriting

The #[turbo_tasks::function] macro rewrites the arguments and return values of functions. The rewritten function signature is referred to as the “external signature”.

Argument Rewrite Rule

- Function arguments with the ResolvedVc<T> type are rewritten to Vc<T>.

  - The value cell is automatically resolved when the function is called. This reduces the work needed to convert between Vc<T> and ResolvedVc<T> types.

  - This rewrite applies for ResolvedVc<T> types nested inside of Option<ResolvedVc<T>> and Vec<ResolvedVc<T>>. For more details, refer to the FromTaskInput trait.

- Method arguments of &self are rewritten to self: Vc<Self>.

Return Type Rewrite Rules

- A return type of Result<Vc<T>> is rewritten into Vc<T>.

  - The Result<Vc<T>> return type allows for idiomatic use of the ? operator inside of task functions.

- A function with no return type is rewritten to return Vc<()> instead of ().

- The impl Future<Output = Vc<T>> type implicitly returned by an async function is flattened into the Vc<T> type, which represents a lazy and re-executable version of the Future.

Some of this logic is represented by the TaskOutput trait and its associated Return type.

External Signature Example

As an example, the method

#[turbo_tasks::function]

async fn foo(

&self,

a: i32,

b: Vc<i32>,

c: ResolvedVc<i32>,

d: Option<Vec<ResolvedVc<i32>>>,

) -> Result<Vc<i32>> {

// ...

}

will have an external signature of

fn foo(

self: Vc<Self>, // was: &self

a: i32,

b: Vc<i32>,

c: Vc<i32>, // was: ResolvedVc<i32>

d: Option<Vec<Vc<i32>>>, // was: Option<Vec<ResolvedVc<i32>>>

) -> Vc<i32>; // was: impl Future<Output = Result<Vc<i32>>>

Methods with a Self Argument

Tasks can be methods associated with a value or a trait implementation using the arbitrary_self_types nightly compiler feature.

Inherent Implementations

#[turbo_tasks::value_impl]

impl Something {

#[turbo_tasks::function]

async fn method(self: Vc<Self>, a: i32) -> Result<Vc<SomethingElse>> {

// Receives the full `Vc<Self>` type, which we must `.await` to get a

// `ReadRef<Self>`. Using `.await?` requires an `async fn` that returns a `Result`.

vdbg!(self.await?.some_field);

// The `Vc` type is useful for calling other methods declared on

// `Vc<Self>`, e.g.:

Ok(self.method_resolved(a))

}

#[turbo_tasks::function]

async fn method_resolved(self: ResolvedVc<Self>, a: i32) -> Result<Vc<SomethingElse>> {

// Same as above, but receives a `ResolvedVc`, which can be `.await`ed

// to a `ReadRef` or dereferenced (implicitly or with `*`) to `Vc`.

vdbg!(self.await?.some_field);

// The `ResolvedVc<Self>` type can be used to call other methods

// declared on `Vc<Self>`, e.g.:

Ok(self.method_ref(a))

}

#[turbo_tasks::function]

fn method_ref(&self, a: i32) -> Vc<SomethingElse> {

// As a convenience, receives the fully resolved version of `self`. This

// does not require `.await`ing to read.

//

// It can access fields on the struct/enum and call methods declared on

// `Self`, but it cannot call other methods declared on `Vc<Self>`

// (without cloning the value and re-wrapping it in a `Vc`).

Vc::cell(SomethingElse::new(self.some_field, a))

}

}

- Declaration Location: The methods are defined on Vc<T> (e.g. Vc<Something>::method and Vc<Something>::method_ref), not on the inner type.

- &self Syntactic Sugar: The &self argument of a #[turbo_tasks::function] implicitly reads the value from self: Vc<Self>.

- External Signature Rewriting: All of the signature rewrite rules apply here. self can be ResolvedVc<T>. async and Result<Vc<T>> return types are supported.

Trait Implementations

#[turbo_tasks::value_impl]

impl Trait for Something {

#[turbo_tasks::function]

fn method(self: Vc<Self>, a: i32) -> Vc<SomethingElse> {

// Trait method implementation...

//

// `self: ResolvedVc<Self>` and `&self` are also valid argument types!

}

}

For traits, only the external signature (after rewriting) must align with the trait definition.

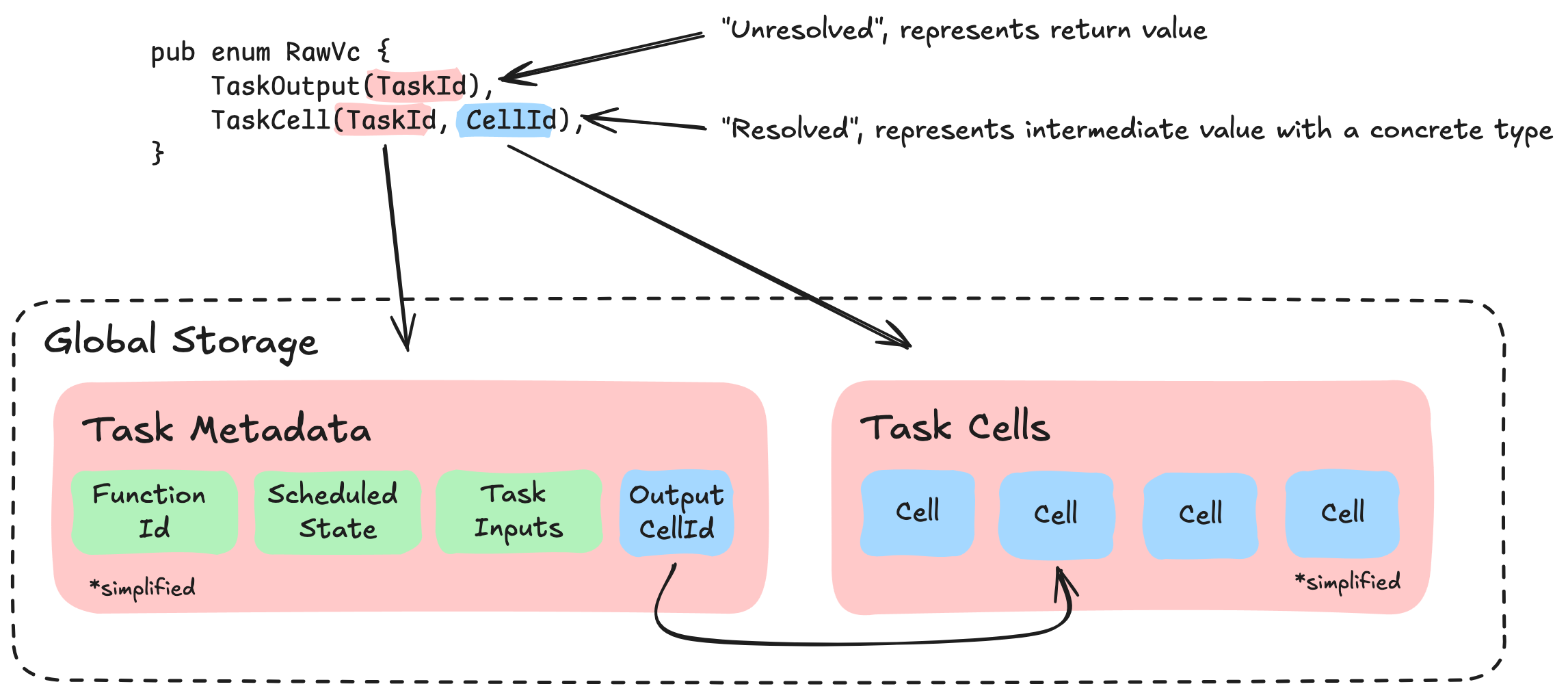

Turbo Tasks: Value Cells

Value Cells (the Vc<T> type) represent the pending result of a computation, similar to a cell in a spreadsheet. When a Vc’s contents change, the change is propagated by invalidating dependent tasks.

The contents (T) of a Vc<T> are always a VcValueType. These types are created using the #[turbo_tasks::value] macro.

Understanding Cells

A Cell is a storage location for data associated with a task. Cells provide:

- Immutability: Once a value is stored in a cell, it becomes immutable until that task is re-executed.

- Recomputability: If invalidated or cache evicted, a cell’s contents can be re-computed by re-executing its associated task.

- Dependency Tracking: When a cell’s contents are read (with .await), the reading task is marked as dependent on the cell.

Cells are stored in arrays associated with the task that constructed them. A Vc can either point to a specific cell (this is a “resolved” cell), or the return value of a function (this is an “unresolved” cell).

Constructing a Cell

Most types using the #[turbo_tasks::value] macro are given a .cell() method. This method returns a Vc of the type.

Transparent wrapper types that use #[turbo_tasks::value(transparent)] cannot define methods on their wrapped type, so instead the Vc::cell function can be used to construct these types.

Updating a Cell

Every time a task runs, its cells are re-constructed.

When .cell() or Vc::cell is called, the value is compared to the previous execution’s using PartialEq. If it differs, the cell is updated, and all dependent tasks are invalidated. This behavior can be overridden to always invalidate using the cell = "new" argument.
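The compare-then-update behaviour can be modelled with a few lines of plain Rust. `ToyCell` is a hypothetical stand-in, not the engine's cell type; it only shows why an unchanged value does not invalidate anything.

```rust
/// Toy cell that remembers the value from the previous task execution.
struct ToyCell<T> {
    value: Option<T>,
}

impl<T: PartialEq> ToyCell<T> {
    fn new() -> Self {
        ToyCell { value: None }
    }

    /// Stores `new_value` and reports whether dependents need invalidating.
    /// An unchanged value leaves the cell untouched and returns `false`.
    fn update(&mut self, new_value: T) -> bool {
        if self.value.as_ref() == Some(&new_value) {
            return false;
        }
        self.value = Some(new_value);
        true
    }
}
```

The first write and any write with a different value return `true`; re-running the task and producing the same value returns `false`, so dependents would be left alone.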

Because cells are keyed by a combination of their type and construction order, task functions should have a deterministic execution order. A function with inconsistent ordering may result in wasted work by invalidating additional cells, though it will still give correct results.

You should use types with deterministic behavior such as IndexMap or BTreeMap in place of types like HashMap (which gives randomized iteration order).
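As a small illustration using only the standard library, a BTreeMap yields its keys in sorted order no matter how the entries were inserted. The function name `ordered_names` is hypothetical.

```rust
use std::collections::BTreeMap;

/// Collects names in a stable order, regardless of insertion order.
fn ordered_names(entries: &[(&str, u32)]) -> Vec<String> {
    let map: BTreeMap<&str, u32> = entries.iter().copied().collect();
    // `BTreeMap` iterates in key order, so the result is deterministic.
    map.keys().map(|k| k.to_string()).collect()
}
```

A task that creates one cell per entry while iterating such a map would construct its cells in the same order on every execution.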

Reading from Cells

To read the value from a cell, Vc<T> implements IntoFuture:

let vc: Vc<T> = ...;

let value: ReadRef<T> = vc.await?;

A ReadRef<T> represents a reference-counted snapshot of a cell’s value at a given point in time.

Vcs must be awaited because we may need to wait for the associated task to finish executing. This operation may fail.

Reading from a Vc registers the current task as a dependent of the cell, meaning the task will be re-computed if the cell’s value changes.

Eventual Consistency

Because turbo_tasks is eventually consistent, two adjacent .awaits of the same Vc<T> may return different values. If this happens, the task will eventually be invalidated and re-executed by a strongly consistent root task.

Tasks affected by a read inconsistency can return errors. These errors will be discarded by the strongly consistent root task. Tasks should never panic.

Currently, all inconsistent tasks are polled to completion. Future versions of the turbo_tasks library may drop tasks that have been identified as inconsistent after some time. As non-root tasks should not perform side-effects, this should be safe, though it may introduce some issues with cross-process resource management.

Defining Values

Values intended for storage in cells must be defined with the #[turbo_tasks::value] attribute:

- Comparison: By default, values implement PartialEq + Eq for comparison.

  - Opt out with #[turbo_tasks::value(eq = "none")].

  - For custom comparison logic, use #[turbo_tasks::value(eq = "manual")].

- Serialization: Values also implement Serialize + Deserialize for persistent caching.

  - Opt out with #[turbo_tasks::value(serialization = "none")].

- Tracing: Values implement TraceRawVcs to facilitate the discovery process of nested Vcs.

  - This may be used for tracing garbage collection in the future.

- Debug Printing: Values implement ValueDebugFormat for debug printing, resolving nested Vcs to their values.

  - Debug-print Vc types with the vdbg!(...) macro.

To opt out of certain requirements for fields within a struct, use the following attributes:

- #[turbo_tasks(debug_ignore)]: Skips the field for debug printing.

- #[turbo_tasks(trace_ignore)]: Skips the field for tracing nested Vcs. It must not have nested Vcs to be correct. This is useful for fields containing foreign types that cannot implement VcValueType.

Code Example

Here’s an example of defining a value for use in a cell:

#[turbo_tasks::value]

struct MyValue {

// Fields go here...

}

In this example, MyValue is a struct that can be stored in a cell.

Enforcing Cell Resolution

As mentioned earlier, a Vc may either be “resolved” or “unresolved”:

- Resolved: Points to a specific cell constructed within a task.

- Unresolved: Points to the return value of a task (a function with a specific set of arguments). This is a lazily evaluated pointer to a resolved cell within the task.

Internally, a Vc may have either representation, but sometimes you want to enforce that a Vc is resolved:

- Equality: When performing equality comparisons between Vcs, it’s important to ensure that both sides are resolved, so that you are comparing equivalent representations of Vcs. All task arguments are automatically resolved before function execution so that task memoization can compare arguments by cell id.

- Resolved VcValueTypes: In the future, all fields in VcValueTypes will be required to use ResolvedVc instead of Vc. This will facilitate more accurate equality comparisons.

The ResolvedVc Type

ResolvedVc<T> is a subtype of Vc<T> that enforces resolution statically with types. It implements Deref<Target = Vc<T>> and behaves similarly to Vc types.

Constructing a ResolvedVc

ResolvedVcs can be constructed using generated .resolved_cell() methods or with the ResolvedVc::cell() function (for transparent wrapper types).

A Vc can be implicitly converted to a ResolvedVc by using a ResolvedVc type in a #[turbo_tasks::function] argument via the external signature rewriting rules.

Vcs can be explicitly converted to ResolvedVcs using .to_resolved().await?. This may require waiting on the task to finish executing.

Reading a ResolvedVc

Even though a Vc may be resolved as a ResolvedVc, we must still use .await? to read its value, as the value could be invalidated or cache-evicted.

Calls

Introduction

In Turbo-Engine, calls to turbo task functions are central to the operation of the system. This section explains how calls work within the Turbo-Engine framework, including the concepts of unresolved and resolved Vc<T>.

Making Calls

let vc: Vc<T> = some_turbo_tasks_function();

When you invoke a Turbo-Tasks function, it returns a Vc<T>, which is a pointer to the task’s return value. Here’s what happens during a call:

- Immediate Return: The call returns instantly, and the task is queued for computation.

- Unresolved Vc: The returned Vc is a so-called “unresolved” Vc, meaning it points to a return value that may not yet be computed.

Resolved vs Unresolved Vcs

- Unresolved Vc: An “unresolved” Vc points to a return value of a Task.

- Resolved Vc: A “resolved” Vc points to a cell computed by a Task.
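The distinction can be modelled with a small enum. This is a toy sketch, not the library's representation: `ToyVc`, its variants and the `outputs` table are all hypothetical, and the loop assumes that task outputs never form a cycle.

```rust
use std::collections::HashMap;

/// Toy pointer type with the two representations described above.
#[derive(Clone, Copy, Debug, PartialEq, Eq, Hash)]
enum ToyVc {
    /// Unresolved: points at whatever the given task returns.
    TaskOutput { task: u32 },
    /// Resolved: points at one concrete cell created by a task.
    TaskCell { task: u32, cell: u32 },
}

/// Follows task outputs until a concrete cell is reached.
/// `outputs` maps a finished task to the pointer it returned.
/// Returns `None` if a task in the chain has no recorded output.
fn resolve(vc: ToyVc, outputs: &HashMap<u32, ToyVc>) -> Option<ToyVc> {
    let mut current = vc;
    loop {
        match current {
            ToyVc::TaskCell { .. } => return Some(current),
            ToyVc::TaskOutput { task } => current = *outputs.get(&task)?,
        }
    }
}
```

Two unresolved pointers to different tasks compare as unequal even when both tasks end up returning the same cell; only after resolving do they compare equal. This is why equality checks and hash keys should use resolved pointers.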

Resolving Vcs

To compare Vc values or ensure they are ready for use, you can resolve them:

let resolved = vc.resolve().await?;

- Reading: It’s not mandatory to resolve Vcs before reading them. Reading a Vc will first resolve it and then read the cell value.

- Dependency Registration: Resolving a Vc registers the current task as a dependent of the task output, ensuring that the task is recomputed if the return value changes.

- Usage as Key: Resolving Vcs is mandatory when using them as keys of a HashMap or HashSet, so that they are keyed by cell identity.

Importance of Resolution

Resolution is crucial for the memoization process:

- Memoization: Vcs used as arguments are identified by their pointer.

- Automatic Resolution: Unresolved Vcs passed to turbo task functions are resolved automatically, ensuring that functions receive resolved Vcs for memoization.

  - Intermediate Task: An intermediate task is created internally to handle the resolution of arguments.

Performance Considerations

While automatic resolution is convenient, it introduces the slight overhead of the intermediate Task:

- Manual Resolution: In performance-critical scenarios, resolving Vcs manually may be beneficial to reduce overhead.

Code Example

Here’s an example of calling a turbo task function and resolving its Vc:

// Calling a turbo task function

let vc: Vc<MyType> = compute_something();

// Resolving the Vc for direct comparison or use.

// Resolving yields another Vc that points directly at the cell, not the value itself.

let resolved_vc: Vc<MyType> = vc.resolve().await?;

Task inputs

Introduction

In Turbo-Engine, task functions are the cornerstone of the build system. To ensure efficient and correct builds, only specific types of arguments, known as TaskInputs, can be passed to these functions. This guide details the requirements and types of task inputs permissible in Turbo-Engine.

Requirements for Task Inputs

Serialization

For persistent caching to function effectively, arguments must be serializable.

This ensures that the state of a task can be saved and restored without loss of fidelity.

This means the implementation of Serialize and Deserialize traits is necessary for task inputs.

HashMap Key

Arguments are also used as keys in a HashMap, necessitating the implementation of PartialEq, Eq, and Hash traits.
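Why these traits are needed can be seen in a toy memo table. `AddArgs` and `MemoTable` are hypothetical names, and the engine's real cache is considerably more involved; the sketch only shows arguments acting as a HashMap key so that a repeated call does not execute again.

```rust
use std::collections::HashMap;

/// Hypothetical argument type; the derives mirror the traits listed above.
#[derive(Clone, PartialEq, Eq, Hash)]
struct AddArgs {
    a: i32,
    b: i32,
}

/// Toy memo table: each distinct argument value maps to one cached result.
#[derive(Default)]
struct MemoTable {
    cache: HashMap<AddArgs, i32>,
    /// Counts how often the function body actually ran.
    executions: usize,
}

impl MemoTable {
    fn add(&mut self, args: AddArgs) -> i32 {
        // Equal arguments hash to the same entry, so this is a cache hit.
        if let Some(&hit) = self.cache.get(&args) {
            return hit;
        }
        self.executions += 1;
        let result = args.a + args.b;
        self.cache.insert(args, result);
        result
    }
}
```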

Types of Task Inputs

Vc<T>

A Vc<T> is a pointer to a cell and serves as a “pass by reference” argument:

- Memoization: It’s important to note that Vc<T> is keyed by pointer for memoization purposes. Identical values in different cells are treated as distinct.

- Singleton Pattern: To ensure memoization efficiency, the Singleton pattern can be employed to guarantee that identical values yield the same Vc. For more info see Singleton Pattern Guide.

Deriving TaskInput

Structs or enums can be made into task inputs by deriving TaskInput:

#[derive(TaskInput)]

struct MyStruct {

// Fields go here...

}

- Pass by Value: Derived TaskInput types are passed by value, which involves cloning the value multiple times. It’s recommended to ensure that these types are inexpensive to clone.

Deprecated: Value<T>

The Value<T> type is a legacy way to define task inputs and should no longer be used.

Future Consideration: Arc<T>

The use of Arc<T> where T implements TaskInput is under consideration for future implementation.

Conclusion

Understanding and utilizing the correct task inputs is vital for the smooth operation of Turbo-Engine. By adhering to the guidelines outlined in this document, developers can ensure that their tasks are set up for success, leading to faster and more accurate builds.

Traits

#[turbo_tasks::value_trait]

pub trait MyTrait {

#[turbo_tasks::function]

fn method(self: Vc<Self>, a: i32) -> Vc<Something>;

// External signature: fn method(self: Vc<Self>, a: i32) -> Vc<Something>

#[turbo_tasks::function]

async fn method2(&self, a: i32) -> Result<Vc<Something>> {

// Default implementation

}

// A normal trait item, not a turbo-task

fn normal(&self) -> SomethingElse;

}

#[turbo_tasks::value_trait]

pub trait OtherTrait: MyTrait + ValueToString {

// ...

}

- The #[turbo_tasks::value_trait] annotation is required to define traits in Turbo-Engine.

- All items annotated with #[turbo_tasks::function] are Turbo-Engine functions.

  - The self argument is always Vc<Self>.

- Default implementations are supported and behave similarly to defining Turbo-Engine functions.

- Vc<Box<dyn MyTrait>> is a Vc to a trait.

- A Vc<Box<dyn MyTrait>> can be turned into a TraitRef<Box<dyn MyTrait>> to call non-turbo-tasks functions. See reading below.

Implementing traits

#[turbo_tasks::value_impl]

impl MyTrait for Something {

#[turbo_tasks::function]

fn method(self: Vc<Self>, a: i32) -> Vc<Something> {

// Implementation

}

#[turbo_tasks::function]

fn method2(&self, a: i32) -> Vc<Something> {

// Implementation

}

fn normal(&self) -> SomethingElse {

// Implementation

}

}

Upcasting

Vcs can be upcast to a trait Vc:

let something_vc: Vc<Something> = ...;

let trait_vc: Vc<Box<dyn MyTrait>> = Vc::upcast(something_vc);

Downcasting

Vcs can also be downcast to a concrete type or a subtrait:

let trait_vc: Vc<Box<dyn MyTrait>> = ...;

if let Some(something_vc) = Vc::try_downcast_type::<Something>(trait_vc).await? {

// ...

}

let trait_vc: Vc<Box<dyn MyTrait>> = ...;

if let Some(something_vc) = Vc::try_downcast::<Box<dyn OtherTrait>>(trait_vc).await? {

// ...

}

There is a compile-time check that the source trait is implemented by the target trait/type.

Note: This will resolve the Vc and have similar implications as .to_resolved().await?.

Sidecasting

let trait_vc: Vc<Box<dyn MyTrait>> = ...;

if let Some(something_vc) = Vc::try_sidecast::<Box<dyn UnrelatedTrait>>(trait_vc).await? {

// ...

}

This won’t do any compile-time checks, so downcasting should be preferred if possible.

Note: This will resolve the Vc and have similar implications as .to_resolved().await?.

Reading

Trait object Vcs can be read by converting them to a TraitRef, which allows non-turbo-tasks functions defined on the trait to be called.

use turbo_tasks::IntoTraitRef;

let trait_vc: Vc<Box<dyn MyTrait>> = ...;

let trait_ref: TraitRef<Box<dyn MyTrait>> = trait_vc.into_trait_ref().await?;

trait_ref.non_turbo_tasks_function();

Patterns

Singleton

The Singleton pattern is a design pattern that restricts the instantiation of a class to a single instance. This is useful for a system that requires a single, global point of access to a particular resource.

In the context of Turbo-Engine, the Singleton pattern can be used to intern a value into a Vc. This ensures that for a single value, there is exactly one resolved Vc. This allows Vcs to be compared for equality and used as keys in hashmaps or hashsets.

Usage

To use the Singleton pattern in Turbo-Engine:

- Make the .cell() method private (use #[turbo_tasks::value] instead of #[turbo_tasks::value(shared)]).

- Only call the .cell() method in a Turbo-Engine function which acts as a constructor.

- The constructor arguments act as a key for the constructor task, which ensures that the same value is always celled in the same task.

- Keep in mind that only resolved Vcs are equal. Unresolved Vcs might not be equal to each other.

Example

Here is an example of how to implement the Singleton pattern in Turbo-Engine:

#[turbo_tasks::value]

struct SingletonString {

value: String,

}

#[turbo_tasks::value_impl]

impl SingletonString {

#[turbo_tasks::function]

fn new(value: String) -> Vc<SingletonString> {

Self { value }.cell()

}

}

#[test]

fn test_singleton() {

let a1 = SingletonString::new("a".to_string()).resolve().await?;

let a2 = SingletonString::new("a".to_string()).resolve().await?;

let b = SingletonString::new("b".to_string()).resolve().await?;

assert_eq!(a1, a2); // Resolved Vcs are equal

assert_ne!(a1, b);

let set = HashSet::from([a1, a2, b]);

assert_eq!(set.len(), 2); // Only two different values

}

In this example, SingletonString is a struct that contains a single String value. The new function acts as a constructor for SingletonString, ensuring that the same string value is always celled in the same task.
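The interning idea itself can be shown without the engine. The `Interner` below is a hypothetical, standard-library-only analogy: the string plays the role of the constructor argument and the returned id plays the role of the resolved cell.

```rust
use std::collections::HashMap;

/// Toy interner: hands out one stable id per distinct string, in the same
/// way a constructor task yields one resolved cell per distinct argument.
#[derive(Default)]
struct Interner {
    ids: HashMap<String, usize>,
}

impl Interner {
    /// Returns the existing id for `value`, or assigns the next free one.
    fn intern(&mut self, value: &str) -> usize {
        let next = self.ids.len();
        *self.ids.entry(value.to_string()).or_insert(next)
    }
}
```

Interning the same string twice returns the same id, so ids can be compared or used as map keys in place of the strings.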

Filesystem

For correct cache invalidation, a Turbo-Engine function must not read from external sources without also handling invalidation when those sources change.

To allow filesystem operations to be used correctly, Turbo-Engine provides a filesystem API which includes file watching for invalidation.

It’s important to only use the filesystem API to access the filesystem.

API

Constructing

// Create a filesystem

let fs = DiskFileSystem::new(...);

// Get the root path, returns a Vc<FileSystemPath>

let fs_path = fs.root();

Navigating

// Access a sub path (error when leaving the root)

let fs_path = fs_path.join("sub/directory/file.txt".to_string());

// Append to the filename (file.txt.bak)

fs_path.append(".bak".to_string());

// Append to the basename (file.copy.txt)

fs_path.append_to_stem(".copy".to_string());

// Change the extension (file.raw)

fs_path.with_extension("raw".to_string());

// Access a sub path (returns None when leaving the root)

fs_path.try_join("../../file.txt".to_string());

// Access a sub path (returns None when leaving the current path)

fs_path.try_join_inside("file.txt".to_string());

// Get the parent directory as Vc<FileSystemPath>

fs_path.parent();

// Get all glob matches

fs_path.read_glob(...);

Metadata access

// Gets the filesystem

fs_path.fs();

// Gets the extension

fs_path.extension();

// Gets the basename

fs_path.file_stem();

Comparing

// Is the path inside of the other path

fs_path.is_inside(other_fs_path);

// Is the path inside or equal to the other path

fs_path.is_inside_or_equal(other_fs_path);

Reading

// Returns the type of the path (file, directory, symlink, ...)

// This is based on read_dir of the parent directory and much more efficient than .metadata()

fs_path.get_type();

// Reads the file content

fs_path.read();

// Reads a symlink content

fs_path.read_link();

// Reads the file content as JSON

fs_path.read_json();

// Read a directory. Returns a map of new Vc<FileSystemPath>s

fs_path.read_dir();

// Gets a Vc<Completion> that changes when the file content changes

fs_path.track();

// Reads metadata of a file

fs_path.metadata();

// Resolves all symlinks in the path and returns the real path

fs_path.realpath();

// Resolves all symlinks in the path and returns the real path and a list of symlinks

fs_path.real_path_with_symlinks();

Writing

// Write the file content

fs_path.write(...);

// Write a symlink

fs_path.write_link(...);

Environment

Network

Advanced

Serialization

Registry

Registration of value types, trait types and functions is required for serialization (Persistent Caching).

This is handled by the turbo-tasks-build crate.

Required setup in every crate using Turbo-Engine:

// build.rs

use turbo_tasks_build::generate_register;

fn main() {

generate_register();

}

// src/lib.rs

// ...

pub fn register() {

// Call register for all dependencies

turbo_tasks::register();

// Run the generated registration code for items of the own crate

include!(concat!(env!("OUT_DIR"), "/register.rs"));

}

In test cases using Turbo Engine:

include!(concat!(env!("OUT_DIR"), "/register_test_<test-case>.rs"));

Why?

During deserialization, Turbo Engine needs to look up the required deserialization code in the registry, since type information is not statically known.
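The lookup can be pictured as a table from a type name to a function that knows how to decode that type. Everything in this sketch is hypothetical (`Registry`, `Deserializer`, `decode_text` and the string-keyed lookup); it illustrates the idea of a runtime registry and is not the format or API that turbo-tasks uses.

```rust
use std::collections::HashMap;

/// Hypothetical deserializer signature: raw bytes in, decoded text out.
type Deserializer = fn(&[u8]) -> Option<String>;

/// Toy registry that maps a type name to its deserialization code.
#[derive(Default)]
struct Registry {
    by_name: HashMap<&'static str, Deserializer>,
}

impl Registry {
    /// Called once per type during registration.
    fn register(&mut self, type_name: &'static str, deserializer: Deserializer) {
        self.by_name.insert(type_name, deserializer);
    }

    /// Looks up the deserializer by name at runtime and applies it.
    /// Unregistered types cannot be decoded and yield `None`.
    fn deserialize(&self, type_name: &str, bytes: &[u8]) -> Option<String> {
        let deserializer = self.by_name.get(type_name)?;
        deserializer(bytes)
    }
}

/// Example deserializer for a UTF-8 text value.
fn decode_text(bytes: &[u8]) -> Option<String> {
    std::str::from_utf8(bytes).ok().map(|s| s.to_string())
}
```

A type that was never registered has no entry in the table, which is why every crate has to run its registration code before persisted data is read back.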

State

Turbo-Engine requires all functions to be pure and deterministic, but sometimes one needs to manage some state during the execution. State is fine as long as functions that read the state are correctly invalidated when the state changes.

To help with that, Turbo-Engine comes with a State<T> struct that automatically handles invalidation.

Usage

#[turbo_tasks::value]

struct SomeValue {

immutable_value: i32,

mutable_value: State<i32>,

}

#[turbo_tasks::value_impl]

impl SomeValue {

#[turbo_tasks::function]

fn new(immutable_value: i32, mutable_value: i32) -> Vc<SomeValue> {

Self {

immutable_value,

mutable_value: State::new(mutable_value),

}

.cell()

}

#[turbo_tasks::function]

fn get_mutable_value(&self) -> Vc<i32> {

// Reading the state will make the current task depend on the state

// Changing the state will invalidate `get_mutable_value`.

let value = self.mutable_value.get();

Vc::cell(value)

}

#[turbo_tasks::function]

fn method(&self) {

// Writing the state will invalidate all readers of the state

// But only if the state has been changed.

self.mutable_value.update_conditionally(|value: &mut i32| {

*value += 1;

// Return true when the value has been changed

true

});

// There are more ways to update the state:

// Sets a new value. Compares this value with the old value and only updates if the value has changed.

// Requires `PartialEq` on the value type.

self.mutable_value.set(42);

// Sets a new value unconditionally. Always updates the state and invalidates all readers.

// Doesn't require `PartialEq` on the value type.

self.mutable_value.set_unconditionally(42);

}

}

State and Persistent Caching

TODO (Not implemented yet)

State is persistent in the Persistent Cache.

Best Practice

Use State only when necessary. Prefer pure functions and immutable values.
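
To make the invalidation rule concrete, here is a small single-threaded model of the idea behind State<T>. It is only a sketch under stated assumptions: `ToyState`, the numeric task ids and `take_invalidated` are hypothetical, and the real engine tracks the reading task implicitly instead of taking it as an argument.

```rust
use std::cell::RefCell;
use std::collections::BTreeSet;

/// Simplified, single-threaded stand-in for `State<T>`.
/// Task ids are plain numbers here; this is an assumption of the sketch.
pub struct ToyState<T> {
    value: RefCell<T>,
    /// Tasks that read the value since the last change.
    readers: RefCell<BTreeSet<u32>>,
    /// Tasks that must be executed again.
    invalidated: RefCell<BTreeSet<u32>>,
}

impl<T: Clone + PartialEq> ToyState<T> {
    pub fn new(value: T) -> Self {
        ToyState {
            value: RefCell::new(value),
            readers: RefCell::new(BTreeSet::new()),
            invalidated: RefCell::new(BTreeSet::new()),
        }
    }

    /// Reading registers the calling task as a dependent of the state.
    pub fn get(&self, reader_task: u32) -> T {
        self.readers.borrow_mut().insert(reader_task);
        self.value.borrow().clone()
    }

    /// Stores the value and invalidates readers only if it actually changed.
    pub fn set(&self, new_value: T) {
        if *self.value.borrow() == new_value {
            return;
        }
        self.set_unconditionally(new_value);
    }

    /// Stores the value and always invalidates every current reader.
    pub fn set_unconditionally(&self, new_value: T) {
        *self.value.borrow_mut() = new_value;
        let readers = std::mem::take(&mut *self.readers.borrow_mut());
        self.invalidated.borrow_mut().extend(readers);
    }

    /// Returns and clears the set of tasks that need to run again.
    pub fn take_invalidated(&self) -> Vec<u32> {
        let drained = std::mem::take(&mut *self.invalidated.borrow_mut());
        drained.into_iter().collect()
    }
}
```

In this model, a task that never read the state is never invalidated, and setting an equal value through `set` is a no-op. That is the property that keeps state compatible with otherwise pure functions.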

Garbage collection

Determinism

All Turbo-Engine functions must be pure and deterministic. This means one should avoid using global state, static mutable values, randomness, or any other non-deterministic operations.

A notable mention is HashMap and HashSet. The order of iteration is not guaranteed and can change between runs. This can lead to non-deterministic builds.

Prefer IndexMap and IndexSet (or BTreeMap and BTreeSet) which have a deterministic order.
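
The difference can be shown with the standard library alone. The sketch below uses BTreeMap (IndexMap would need the external indexmap crate); the function names and the rendered format are made up for illustration.

```rust
use std::collections::{BTreeMap, HashMap};

/// Renders entries from a `HashMap`. The order of the output can differ
/// between runs because `HashMap` iteration order is unspecified.
pub fn render_unordered(sizes: &HashMap<String, u32>) -> String {
    sizes
        .iter()
        .map(|(name, size)| format!("{}={}", name, size))
        .collect::<Vec<_>>()
        .join(",")
}

/// Renders entries from a `BTreeMap`, which always iterates in key order.
pub fn render_ordered(sizes: &BTreeMap<String, u32>) -> String {
    sizes
        .iter()
        .map(|(name, size)| format!("{}={}", name, size))
        .collect::<Vec<_>>()
        .join(",")
}

/// Makes `HashMap` data deterministic by copying it into a `BTreeMap` first.
pub fn render_deterministic(sizes: &HashMap<String, u32>) -> String {
    let ordered: BTreeMap<String, u32> = sizes
        .iter()
        .map(|(name, size)| (name.clone(), *size))
        .collect();
    render_ordered(&ordered)
}
```

If a function's result is derived from `render_unordered`, two runs over identical input may produce different strings, which would look like a change to every dependent task.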

Architecture

This is a high level overview of Turbopack’s architecture.

Core Concepts

Turbopack has these guiding principles:

Incremental

- Avoid operations that require broad/complete graph traversal.

- Changes should only have local effects.

- Avoid depending on “global” information (information from the whole app).

- Avoid “one to many” dependencies (depending on one piece of information from many sources).

Deterministic

- Everything needs to be deterministic.

- Avoid randomness, avoid depending on timing.

- Be careful with non-deterministic data structures (like HashMap, HashSet).

Lazy

- Avoid computing more information than needed for a given operation.

- Put extra indirection when needed.

- Use decorators to transform data on demand.

Extensible

- Use traits.

- Avoid depending on concrete implementations (avoid casting).

- Make behavior configurable by passing options.

Simple to use

- Use good defaults. Normal use case should work out of the box.

- Use presets for common use cases.

Layers

Turbopack models the user’s code as it travels through Turbopack in multiple ways, each of which can be thought of as a layer in the system below:

┌───────────────┬──────────────────────────────┐

│ Sources │ │

├───────────────┘ │

│ What "the user wrote" as │

│ application code │

└──────────────────────────────────────────────┘

┌───────────────┬──────────────────────────────┐

│ Modules │ │

├───────────────┘ │

│ The "compiler's understanding" of the │

│ application code │

└──────────────────────────────────────────────┘

┌───────────────┬──────────────────────────────┐

│ Output assets │ │

├───────────────┘ │

│ What the "target environment understands" as │

│ executable application │

└──────────────────────────────────────────────┘

Each layer is implemented as a decorator/wrapper around the previous layer in top-down order. In contrast to tools like webpack, where whole {source,module,chunk} graphs are built in serial, Turbopack builds lazily by successively wrapping an asset in each layer and building only what’s needed without blocking on everything in a particular phase.
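
The wrapping idea can be sketched in plain Rust. This is a toy model, not Turbopack's types: the `Source`, `Module` and `OutputAsset` structs below, the one-line import parser and the `analysis_runs` counter are all assumptions made to show that an outer layer only triggers work in the inner layer when it is asked for something that needs it.

```rust
use std::cell::{Cell, RefCell};

/// Layer 1: what the user wrote.
pub struct Source {
    pub path: String,
    pub content: String,
}

/// Layer 2: wraps a `Source` and analyzes it only on first access.
pub struct Module {
    source: Source,
    imports: RefCell<Option<Vec<String>>>,
    /// Counts how often the (expensive) analysis ran.
    pub analysis_runs: Cell<u32>,
}

impl Module {
    pub fn new(source: Source) -> Self {
        Module {
            source,
            imports: RefCell::new(None),
            analysis_runs: Cell::new(0),
        }
    }

    /// Returns the imports, analyzing the source on first use and caching it.
    pub fn imports(&self) -> Vec<String> {
        if let Some(cached) = &*self.imports.borrow() {
            return cached.clone();
        }
        self.analysis_runs.set(self.analysis_runs.get() + 1);
        let found: Vec<String> = self
            .source
            .content
            .lines()
            .filter_map(|line| line.trim().strip_prefix("import "))
            .map(|rest| rest.trim_matches(|c| c == '"' || c == ';').to_string())
            .collect();
        *self.imports.borrow_mut() = Some(found.clone());
        found
    }
}

/// Layer 3: wraps a `Module` and produces output only when asked.
pub struct OutputAsset {
    pub module: Module,
}

impl OutputAsset {
    pub fn new(module: Module) -> Self {
        OutputAsset { module }
    }

    /// The output path is cheap and needs no analysis.
    pub fn path(&self) -> String {
        format!("out/{}", self.module.source.path)
    }

    /// Producing the content is what pulls on the module layer.
    pub fn content(&self) -> String {
        format!(
            "// deps: {}\n{}",
            self.module.imports().join(", "),
            self.module.source.content
        )
    }
}
```

Asking the wrapped asset for its path does not run the analysis at all; only `content()` does, and only once.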

Sources

Sources are content of code or files before they are analyzed and converted into Modules. They might be the original source file the user has written, or a virtual source code that was generated. They might also be transformed from other Sources, e. g. when using a preprocessor like SASS or webpack loaders.

Sources do not model references (the relationships between files like through import, sourceMappingURL, etc.).

Each Source has an identifier composed of file path, query, fragment, and other modifiers.

Modules

Modules are the result of parsing, transforming and analyzing Sources. They include references to other modules as analyzed.

References can be followed to traverse a subgraph of the module graph. Modules implicitly form the module graph by exposing references.

Each Module has an identifier composed of file path, query, fragment, and other modifiers.
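
As a rough illustration of such an identifier, the sketch below models the four parts as a plain struct. `AssetIdent`, its field names and the string format produced by `Display` are assumptions of this example and not the format Turbopack actually prints.

```rust
use std::fmt;

/// Hypothetical identifier made of the parts listed above.
#[derive(Clone, Debug, PartialEq, Eq, Hash)]
pub struct AssetIdent {
    pub path: String,
    pub query: Option<String>,
    pub fragment: Option<String>,
    pub modifiers: Vec<String>,
}

impl AssetIdent {
    /// Creates an identifier that consists of a file path only.
    pub fn from_path(path: &str) -> Self {
        AssetIdent {
            path: path.to_string(),
            query: None,
            fragment: None,
            modifiers: Vec::new(),
        }
    }

    /// Returns a copy with an extra modifier, leaving the original untouched.
    pub fn with_modifier(&self, modifier: &str) -> Self {
        let mut next = self.clone();
        next.modifiers.push(modifier.to_string());
        next
    }
}

impl fmt::Display for AssetIdent {
    fn fmt(&self, f: &mut fmt::Formatter) -> fmt::Result {
        write!(f, "{}", self.path)?;
        if let Some(query) = &self.query {
            write!(f, "?{}", query)?;
        }
        if let Some(fragment) = &self.fragment {
            write!(f, "#{}", fragment)?;
        }
        for modifier in &self.modifiers {
            write!(f, " ({})", modifier)?;
        }
        Ok(())
    }
}
```

Because modifiers are part of the identity, the same file path can appear several times in the graph, for example once per way it is processed, without the entries colliding.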

Output asset

Output assets are artifacts that are understood by the target environment. They include references to other output assets.

Output assets are usually the result of transforming one or more modules to a given output format. This can be a very simple transformation like just copying the Source content (like with static assets like images), or a complex transformation like chunking and bundling modules (like with JavaScript or CSS).

Output assets can be emitted to disk or served from the dev server.

Each Output asset has a file path.

Example

graph TD

subgraph Source code

src ---> b.src.js[ ]

src ---> util

util ---> d.src.css[ ]

util ---> c.src.js[ ]

end

subgraph Module graph

a.ts ---> b.js

a.ts ---> c.js

b.js ---> c.js

c.js ---> d.css

c.js ---> e.js[ ]

b.js ---> f.js[ ]

b.js -.-> b.src.js

d.css -.-> d.src.css

end

subgraph Output graph

main.js --> chunk.js

main.js --> chunk2.js[ ]

main.js -.-> a.ts

main.js -.-> b.js

main.js -.-> c.js

end

Output format

An output format changes how a module is run in a specific target environment. The output format is chosen based on target environment and the input module.

graph LR

Browser & Node.js ---> css_chunking[CSS chunking]

Browser & Node.js ---> static_asset[Static asset]

Browser & Node.js ---> js_chunking[JS chunking]

Node.js ---> Rebasing

vercel_nodejs[Vercel Node.js] ---> nft.json

bundler[Bundler or module-native target] -.-> Modules

In the future, Turbopack should support producing modules as an output format, to be used by other bundlers or runtimes that natively support ESM modules.

Chunking

Chunking is the process that decides which modules are placed into which bundles, and the relationship between these bundles.

For this process a few intermediate concepts are used:

- ChunkingContext: A context trait which controls the process.

- ChunkItem: A derived object from a Module which combines the module with the ChunkingContext.

- ChunkableModule: A trait which defines how a specific Module can be converted into a ChunkItem.

- ChunkableModuleReference: A trait which defines how a specific ModuleReference will interact with chunking.

- ChunkType: A trait which defines how to create a Chunk from ChunkItems.

- Chunk: A trait which represents a bundle of ChunkItems.

graph TB

convert{{convert to chunk item}}

ty{{get chunk type}}

create{{create chunk}}

EcmascriptModule -. has trait .-> Module

CssModule -. has trait .-> Module

Module -. has trait .-> ChunkableModule

ChunkableModule === convert

ChunkingContext --- convert

convert ==> ChunkItem

ChunkItem ==== ty

EcmascriptChunkType -. has trait ..-> ChunkType

CssChunkType -. has trait ..-> ChunkType

ty ==> ChunkType

ChunkType ==== create

create ==> Chunk

EcmascriptChunk -. has trait ..-> Chunk

CssChunk -. has trait ..-> Chunk

BrowserChunkingContext -. has trait .-> ChunkingContext

NodeJsChunkingContext -. has trait .-> ChunkingContext

A Module needs to implement the ChunkableModule trait to be considered for chunking. All references of these modules that should be considered for chunking need to implement the ChunkableModuleReference trait.

The chunking algorithm walks the module graph following these chunkable references to find all modules that should be bundled. These modules are converted into ChunkItems and a special algorithm decides which ChunkItems are placed into which Chunks.

One factor is, for example, the ChunkType of each ChunkItem, since only ChunkItems with the same ChunkType can be placed into the same Chunk. Other factors are the directory structure and the chunk size.

Once the ChunkItems that should be placed together are found, a Chunk is created for each group of ChunkItems by using the method on ChunkType.

An OutputAsset is then created for each Chunk, which is eventually emitted to disk. The output format decides how a Chunk is transformed into an OutputAsset. The OutputAssets that are loaded together are called a chunk group.

The chunking also collects all modules into an AvailableModules struct, which is used, for example, by nested chunk groups to avoid duplicating modules that are already in the parent chunk group.
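
A heavily simplified model of these steps is sketched below. It groups chunk items by chunk type only and ignores directory structure and chunk size; the concrete `ChunkType` enum, the structs and the functions `make_chunks` and `extend_available` are hypothetical stand-ins for the traits described above.

```rust
use std::collections::{BTreeMap, BTreeSet};

/// Stand-in for the `ChunkType` trait: only two fixed kinds exist here.
#[derive(Clone, Copy, Debug, PartialEq, Eq, PartialOrd, Ord)]
pub enum ChunkType {
    Ecmascript,
    Css,
}

#[derive(Clone, Debug, PartialEq, Eq)]
pub struct ChunkItem {
    pub module: String,
    pub ty: ChunkType,
}

#[derive(Debug, PartialEq, Eq)]
pub struct Chunk {
    pub ty: ChunkType,
    pub modules: Vec<String>,
}

/// Groups chunk items into one chunk per chunk type, skipping modules that
/// are already available from a parent chunk group.
pub fn make_chunks(items: &[ChunkItem], available: &BTreeSet<String>) -> Vec<Chunk> {
    let mut by_type: BTreeMap<ChunkType, Vec<String>> = BTreeMap::new();
    for item in items {
        if available.contains(&item.module) {
            continue;
        }
        by_type
            .entry(item.ty)
            .or_insert_with(Vec::new)
            .push(item.module.clone());
    }
    by_type
        .into_iter()
        .map(|(ty, modules)| Chunk { ty, modules })
        .collect()
}

/// Returns the available set extended by everything placed into `chunks`.
pub fn extend_available(available: &BTreeSet<String>, chunks: &[Chunk]) -> BTreeSet<String> {
    let mut next = available.clone();
    for chunk in chunks {
        next.extend(chunk.modules.iter().cloned());
    }
    next
}
```

A nested chunk group would call `make_chunks` with the set returned by `extend_available` for its parent, so a module that the parent already loaded is not emitted a second time.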

Mixed Graph

Not only can Turbopack travel between these different layers, but it can do so across and between different environments.

- It’s possible to transition between Output Formats

- For example, starting in ecmascript but referencing static assets with new URL('./foo.png')

- Embed/Reference Output Assets in Modules

- e.g. embedding base64 data url of a chunk inside another chunk

- SSR Page references client chunks for hydration

- embed urls to client-side chunks into server-generated html

- references output assets from a different chunking root from a different environment

- Client Component referenced from Server Component

- Client component wrapper (Server Component) references client chunks of a client component

All of this is made possible by having a single graph that models all sources, modules, and output assets across all different environments.

Crates

Layers

We can split the crates into the following layers:

graph TB

cli_utils[CLI Utils]

Tools --> Facade

CLI --> Facade & cli_utils

Facade --> Output & Modules & Utils & Core

Modules & Output --> Core & Utils

Utils --> Core

Core

These crates are the core of Turbopack, providing the basic traits and types. They handle, for example, issue reporting, resolving, and environments.

- turbopack-core: Core types and traits

- turbopack-resolve: Specific resolving implementations

Utils

These crates provide utility functions and types that are used throughout the project.

- turbopack-swc-utils: SWC helpers that are shared between ecmascript and css

- turbopack-node: Node.js pool processing, webpack loaders, postcss transform

- turbopack-env: .env file loading

Modules

These crates provide implementations of various module types.

- turbopack-ecmascript: The ecmascript module type (JavaScript, TypeScript, JSX), Tree Shaking

- turbopack-ecmascript-plugins: Common ecmascript transforms (styled-components, styled-jsx, relay, emotion, etc.)

- turbopack-css: The CSS module type (CSS, CSS Modules, SWC, lightningcss)

- turbopack-json: The JSON module type

- turbopack-static: The static module type (images, fonts, etc.)

- turbopack-image: Image processing (metadata, optimization, blur placeholder)

- turbopack-wasm: The WebAssembly module type

- turbopack-mdx: The mdx module type

Output

These crates provide implementations of various output formats.

- turbopack-browser: Output format for the browser target, HMR

- turbopack-nodejs: Output format for the Node.js target

- turbopack-ecmascript-runtime: The runtime code for bundles

- turbopack-ecmascript-hmr-protocol: The Rust side of the HMR protocol

Facade

These crates provide the facade to use Turbopack. They combine the different modules and output formats into presets and handle the default module rules.

- turbopack-binding: A crate reexporting all Turbopack crates for consumption in other projects

- turbopack: The main facade bundling all module types and rules when to apply them, presets for resolving and module processing

CLI

These crates provide the standalone CLI and the dev server.

- turbopack-cli: The Turbopack CLI

- turbopack-dev-server: The on demand dev server implementation

CLI Utils

These crates provide utility functions for the CLI.

- turbopack-cli-utils: Helper functions for CLI tooling

- turbopack-trace-utils: Helper function for tracing

Tools

These crates provide tools to test, benchmark and trace Turbopack.

- turbopack-bench: The Turbopack benchmark

- turbopack-tests: Integration tests for Turbopack

- turbopack-create-test-app: A CLI to create a test application with configurable number of modules

- turbopack-swc-ast-explorer: A CLI tool to explore the SWC AST

- turbopack-test-utils: Helpers for testing

- turbopack-trace-server: A CLI to open a trace file and serve it in the browser

@next/swc

@next/swc is a binding that makes features written in Rust available to the JavaScript runtime. This binding contains not only Turbopack, but also other features needed by Next.js (SWC, LightningCSS, etc.).

Package hierarchies

The figure below shows the dependencies between packages at a high level.

flowchart TD

C(next-custom-transforms) --> A(napi)

C(next-custom-transforms) --> B(wasm)

D(next-core) --> A(napi)

E(next-build) --> A(napi)

F(next-api) --> A(napi)

C(next-custom-transforms) --> D

D(next-core) --> F(next-api)

D(next-core) --> E(next-build)

- next-custom-transforms: Provides next-swc specific SWC transform visitors. Both Turbopack and the plain next-swc bindings (transform) use these transforms. Since this is a bottom-level package that can be imported anywhere (turbopack / next-swc / wasm), it is important that the package does not contain specific dependencies. For example, using Turbopack’s Vc in this package will cause build failures in the wasm bindings.

- next-core: Implements Turbopack features for the core Next.js functionality. This is also where Turbopack-specific transform providers (implementing CustomTransformer) live, which wrap SWC’s transformers from next-custom-transforms.

- next-api: The binding interface to Next.js, which provides Next.js functionality using next-core.

- napi / wasm: The actual binding interfaces: napi for Node.js and wasm for WebAssembly. Note that the wasm bindings cannot import packages that use Turbopack’s features.

How to add new swc transforms

- Implement a new visitor in next-custom-transforms. It is highly encouraged to use VisitMut instead of Fold for performance reasons.

- Implement a new CustomTransformer under packages/next-swc/crates/next-core/src/next_shared/transforms to make a Turbopack ecma transform plugin, then adjust the corresponding rules in packages/next-swc/crates/next-core/src/(next_client|next_server)/context.rs.
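
The difference between the two visitor styles can be sketched without SWC. The `Expr` type and both functions below are hypothetical and far smaller than the real AST and traits; they only contrast a Fold-like transform, which consumes and rebuilds nodes, with a VisitMut-like transform, which edits nodes in place through `&mut`.

```rust
/// Tiny stand-in AST; the real SWC AST is far larger.
#[derive(Clone, Debug, PartialEq)]
pub enum Expr {
    Ident(String),
    Call(Box<Expr>, Vec<Expr>),
}

/// Fold style: takes the node by value and returns a rebuilt node.
/// Every `Call` node and its argument list is constructed again.
pub fn fold_rename(expr: Expr, from: &str, to: &str) -> Expr {
    match expr {
        Expr::Ident(name) => {
            if name.as_str() == from {
                Expr::Ident(to.to_string())
            } else {
                Expr::Ident(name)
            }
        }
        Expr::Call(callee, args) => Expr::Call(
            Box::new(fold_rename(*callee, from, to)),
            args.into_iter()
                .map(|arg| fold_rename(arg, from, to))
                .collect(),
        ),
    }
}

/// VisitMut style: walks the tree through `&mut` and only touches the
/// identifiers that actually change.
pub fn visit_mut_rename(expr: &mut Expr, from: &str, to: &str) {
    match expr {
        Expr::Ident(name) => {
            if name.as_str() == from {
                *name = to.to_string();
            }
        }
        Expr::Call(callee, args) => {
            visit_mut_rename(callee, from, to);
            for arg in args.iter_mut() {
                visit_mut_rename(arg, from, to);
            }
        }
    }
}
```

Both functions produce the same tree. The in-place version avoids moving and reallocating the nodes it does not change, which is the usual argument for preferring the mutable visitor.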

napi bindings feature matrix

Due to platform differences, the napi bindings selectively enable supported features. See the table below for the currently enabled features.

| arch\platform | Linux(gnu) | Linux(musl) | Darwin | Win32 |

|---|---|---|---|---|

| ia32 | a,b,d,e | |||

| x64 | a,b,d,e,f | a,b,d,e,f | a,b,d,e,f | a,b,d,e,f |

| aarch64 | a,d,e,f | a,d,e,f | a,b,d,e,f | a,b,c,e |

- a: turbo_tasks_malloc

- b: turbo_tasks_malloc_custom_allocator

- c: native-tls

- d: rustls-tls

- e: image-extended (webp)

- f: plugin

Napi-rs

To generate bindings for Node.js, @next/swc relies on napi-rs. Check the napi packages for the actual implementation.

Turbopack, turbopack-binding, and next-swc

Since Turbopack currently has features split across multiple packages and no official SDK, we’ve created a single entry point package, turbopack-binding, to use Turbopack features in next-swc. Turbopack-binding also reexports SWC, which helps to reduce the version difference between next-swc and turbopack. However, there are currently some places that use direct dependencies for macros and other issues.

Turbopack-binding is a package that is only responsible for simple reexports, allowing you to access each package by feature. The features currently in use in next-swc are roughly as follows.

SWC

- __swc_core_binding_napi: Features for using napi with swc

- __swc_core_serde: Serde serializable ast

- __swc_core_binding_napi_plugin_*: swc wasm plugin support

- __swc_transform_modularize_imports: Modularize imports custom transform

- __swc_transform_relay: Relay custom transform

- __swc_core_next_core: Features required for next-core package

Turbo

- __turbo: Core feature to enable other turbo features.

- __turbo_tasks_*: Features related to Turbo task.

- __turbo_tasks_malloc_*: Custom memory allocator features.

Turbopack

- __turbopack: Core feature to enable other turbopack features.

- __turbopack_build: Implements functionality for production builds

- __turbopack_cli_utils: Formatting utilities for building CLIs around turbopack

- __turbopack_core: Implements most of Turbopack’s core structs and functionality. Used by many Turbopack crates

- __turbopack_dev: Implements development-time builds

- __turbopack_ecmascript: Ecmascript parse, transform, analysis

- __turbopack_ecmascript_plugin: Entrypoint for the custom swc transforms

- __turbopack_ecmascript_hmr_protocol

- __turbopack_ecmascript_runtime: Runtime code implementing chunk loading, hmr, etc.

- __turbopack_env: Support for process.env and dotenv

- __turbopack_static: Support for static assets

- __turbopack_image_*: Native image decoding / encoding support. Some codecs have separate features (avif, etc.)

- __turbopack_node: Evaluates JavaScript code from Rust via a Node.js process pool

- __turbopack_trace_utils

Other features

- __feature_auto_hash_map

- __feature_node_file_trace: Node file trace

- __feature_mdx_rs: Mdx compilation support

Next.rs api

Contexts

TBD

Alias and resolve plugins

Turbopack supports aliasing imports, similar to Webpack’s ‘alias’ config. Aliases are applied when Turbopack runs its module resolvers.

Setting up alias

To set the alias, use the import_map in each context’s ResolveOptionContext. ResolveOptionContext also has fallback_import_map which will be applied when a request to resolve cannot find any corresponding imports.

let resolve_option_context = ResolveOptionContext {

import_map: Some(next_client_import_map),

fallback_import_map: Some(next_client_fallback_import_map),

// All other options keep their default values

..Default::default()

};

These internal mapping options are applied last among all resolve options. For example, if the user has a compilerOptions.paths alias in tsconfig, it takes precedence.

An ImportMap entry can be either an exact 1:1 match (AliasPattern::Exact) or a wildcard match (AliasPattern::Wildcard). Check AliasPattern for more details.
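
To illustrate the two matching modes, here is a simplified model of alias lookup. `ToyAliasPattern`, `resolve_alias`, the `*` substitution in the target and the example paths are assumptions made for this sketch; the real AliasPattern and ImportMap types have a richer API.

```rust
/// Simplified stand-in for the two alias pattern kinds named above.
#[derive(Clone, Debug, PartialEq)]
pub enum ToyAliasPattern {
    /// Matches exactly one request string.
    Exact(String),
    /// Matches `prefix` + anything + `suffix` and captures the middle part.
    Wildcard { prefix: String, suffix: String },
}

impl ToyAliasPattern {
    /// Parses "react" into `Exact` and "@/components/*" into `Wildcard`.
    pub fn parse(pattern: &str) -> Self {
        match pattern.find('*') {
            Some(index) => ToyAliasPattern::Wildcard {
                prefix: pattern[..index].to_string(),
                suffix: pattern[index + 1..].to_string(),
            },
            None => ToyAliasPattern::Exact(pattern.to_string()),
        }
    }

    /// Returns the captured wildcard part (empty for exact matches),
    /// or `None` if the request does not match.
    pub fn capture<'a>(&self, request: &'a str) -> Option<&'a str> {
        match self {
            ToyAliasPattern::Exact(exact) => {
                if request == exact.as_str() {
                    Some("")
                } else {
                    None
                }
            }
            ToyAliasPattern::Wildcard { prefix, suffix } => request
                .strip_prefix(prefix.as_str())?
                .strip_suffix(suffix.as_str()),
        }
    }
}

/// Resolves a request against an ordered list of (pattern, target) entries.
/// A `*` in the target is replaced by the captured part.
pub fn resolve_alias(map: &[(ToyAliasPattern, String)], request: &str) -> Option<String> {
    map.iter().find_map(|(pattern, target)| {
        pattern
            .capture(request)
            .map(|captured| target.replace('*', captured))
    })
}
```

A request that matches no entry yields `None`, which in this model is the point where normal resolution would continue.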

React

Next.js internally aliases react imports based on context. See the Webpack config and the Turbopack configuration for how this is set up.

ResolvePlugin

ResolveOptionContext has another option, plugins, which can affect the context’s resolve results. A plugin that implements ResolvePlugin can replace resolved results. Currently, resolve plugins only support after_resolve, which is invoked after the full resolve completes.

after_resolve receives information that helps identify the specific resolve. Most notably, the request param contains the full Request itself.

async fn after_resolve(

&self,

fs_path: Vc<FileSystemPath>,

file_path: Vc<FileSystemPath>,

reference_type: Value<ReferenceType>,

request: Vc<Request>,

) -> Result<Vc<ResolveResultOption>>

A plugin can return its own ResolveResult or return nothing (None). Returning None keeps the original resolve result; otherwise the original resolve result is overridden. In Next.js, resolve plugins are used to trigger side effects by emitting an issue if a context imports a module that is not allowed (InvalidImportResolvePlugin), or to replace a single import with context-aware imports (NextNodeSharedRuntimeResolvePlugin).
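
The override rule can be modelled with a synchronous toy trait. Nothing below is the real ResolvePlugin API: `ToyResolvePlugin`, both plugin structs and `apply_plugins` are hypothetical, and letting the first plugin that returns `Some` win is an assumption of this sketch.

```rust
use std::cell::RefCell;

/// Simplified resolve result: just the resolved path.
#[derive(Clone, Debug, PartialEq)]
pub struct ToyResolveResult {
    pub path: String,
}

/// Hypothetical, synchronous stand-in for a resolve plugin.
pub trait ToyResolvePlugin {
    /// Returns `Some` to override the result, `None` to keep the original.
    fn after_resolve(&self, resolved: &ToyResolveResult, request: &str)
        -> Option<ToyResolveResult>;
}

/// Replaces one specific request with a different path.
pub struct RedirectPlugin {
    pub request: String,
    pub replacement: String,
}

impl ToyResolvePlugin for RedirectPlugin {
    fn after_resolve(&self, _resolved: &ToyResolveResult, request: &str)
        -> Option<ToyResolveResult> {
        if request == self.request.as_str() {
            Some(ToyResolveResult { path: self.replacement.clone() })
        } else {
            None
        }
    }
}

/// Records an issue for a forbidden request but never changes the result.
pub struct ForbiddenImportPlugin {
    pub forbidden: String,
    pub issues: RefCell<Vec<String>>,
}

impl ToyResolvePlugin for ForbiddenImportPlugin {
    fn after_resolve(&self, _resolved: &ToyResolveResult, request: &str)
        -> Option<ToyResolveResult> {
        if request == self.forbidden.as_str() {
            self.issues
                .borrow_mut()
                .push(format!("import of {} is not allowed here", request));
        }
        None
    }
}

/// Runs the plugins in order; the first `Some` replaces the original result.
pub fn apply_plugins(
    plugins: &[&dyn ToyResolvePlugin],
    original: ToyResolveResult,
    request: &str,
) -> ToyResolveResult {
    for plugin in plugins {
        if let Some(replacement) = plugin.after_resolve(&original, request) {
            return replacement;
        }
    }
    original
}
```

The two plugins correspond loosely to the two uses mentioned above: one only produces a side effect and returns `None`, the other swaps the result.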

Custom transforms

React server components

API references

Turbopack uses code comments and rustdoc to document the API. The full package documentation can be found here.

The list below contains packages, with brief descriptions, that you may want to refer to when first approaching the code.

- napi: NAPI bindings to make Turbopack and SWC’s features available in JS code in Next.js

- next-custom-transforms: Collection of SWC transform visitor implementations for features provided by Next.js

- next_api: Linking the interfaces and data structures of the NAPI bindings with the actual Turbopack’s next.js feature implementation interfaces

- next_core: Support and implementation of Next.js features in Turbopack

Links

The links below are additional materials such as conference recordings. Some of the links are not publicly available yet. Ask in the team channel if you don’t have access.

Writing documentation for Turbopack

Turbopack has two main types of documentation. One is a description of the code and APIs, and the other is a high-level description of the overall behavior. Since there is no way to integrate with rustdoc yet, we deploy mdbook and rustdoc together with a script.

The source for the documentation is available here.

Write a general overview document

Files written in markdown format in packages/next-swc/docs/src can be read by mdbook (like the page you’re looking at now). Our configuration includes mermaid support for diagrams. See the architecture page for an example of how it looks.

Write a rustdoc comment

If you write a documentation comment in your code, it will be included in the documentation generated by rustdoc. The full index can be found here, and individual packages can be read via https://turbopack-rust-docs.vercel.sh/rustdoc/${pkg_name}/index.html.
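
As a small example of what such a comment looks like, the function below carries a rustdoc comment with a summary line, a description and a `# Panics` section. The function itself, `rustdoc_url`, is hypothetical and only reuses the URL scheme quoted above.

```rust
/// Builds the rustdoc URL for a single package.
///
/// `pkg_name` is the crate name as rustdoc spells it, with underscores
/// instead of dashes (for example `next_core`).
///
/// # Panics
///
/// Panics if `pkg_name` is empty.
pub fn rustdoc_url(pkg_name: &str) -> String {
    assert!(!pkg_name.is_empty(), "package name must not be empty");
    format!(
        "https://turbopack-rust-docs.vercel.sh/rustdoc/{}/index.html",
        pkg_name
    )
}
```

Lines starting with `///` are picked up by rustdoc and rendered as the item's documentation; regular `//` comments are not.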

Build the documentation

The scripts/deploy-turbopack-docs.sh script bundles the mdbook and rustdoc and deploys them. You can use it locally as a build script because it won’t run the deployment if it doesn’t have a token. The script runs a rustdoc build followed by an mdbook build, and then copies the output of the rustdoc build under mdbook/rustdoc.

To build the documentation locally, ensure these are installed:

- Rust compiler

- mdbook

- mdbook-mermaid

./scripts/deploy-turbopack-docs.sh

open ./target/mdbook/index.html

or

# Note: rustdoc links cannot be checked when running the dev server

mdbook serve --dest-dir $(pwd)/target/mdbook ./packages/next-swc/docs